Vectorised Data Makes RAG Work Faster: How IP Teams Turn Documents into Answers

by Kacper Gorski, Head of GTM at Lighthouse IP

Retrieval-Augmented Generation works best when the inputs are clean.

Feed it pre-processed, vectorised data and it retrieves the right passages and drafts sourced answers quickly. Feed it raw PDFs and you push work to query time, which increases latency and cost. The practical takeaway is simple: prepare your data first.

RAG in 30 seconds

RAG has two steps:

- Retrieve relevant passages from your knowledge base.

- Ask an LLM to compose an answer that cites those passages.
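The two steps can be sketched in a few lines. This is a minimal, hypothetical illustration rather than a production design: the passage IDs, texts, and 3-dimensional vectors are invented, the query vector is given directly instead of being produced by an embedding model, and the LLM call is left out because the article does not name a model or API. Step 1 ranks pre-computed vectors by cosine similarity. Step 2 builds a prompt that restricts the model to the retrieved passages and asks it to cite them.

```python
import math

# Hypothetical knowledge base: passage ID -> (text, pre-computed embedding).
# The 3-dimensional vectors are invented for illustration; real embeddings
# come from an embedding model and have hundreds of dimensions.
PASSAGES = {
    "doc-1 claim 1": ("A heat exchanger with a graphene-coated fin.", [0.9, 0.1, 0.0]),
    "doc-2 claim 3": ("A battery housing with integrated cooling channels.", [0.2, 0.8, 0.1]),
    "doc-3 claim 1": ("A fin array made of aluminium alloy.", [0.7, 0.2, 0.1]),
}


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def retrieve(query_vec, k=2):
    """Step 1: return the k passages most similar to the query vector."""
    ranked = sorted(PASSAGES.items(), key=lambda item: cosine(query_vec, item[1][1]), reverse=True)
    return [(pid, text) for pid, (text, _vec) in ranked[:k]]


def build_prompt(question, passages):
    """Step 2: build an LLM prompt that restricts the answer to cited passages."""
    context = "\n".join(f"[{pid}] {text}" for pid, text in passages)
    return (
        "Answer using only the passages below and cite their IDs in brackets.\n"
        f"{context}\n\nQuestion: {question}"
    )


# The query vector would normally come from embedding the question itself.
hits = retrieve([0.85, 0.15, 0.05])
print(build_prompt("Which fin designs have we disclosed?", hits))
```

With these toy vectors the two fin-related passages are retrieved and the battery passage is left out of the prompt, so the model never sees it.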

Good retrieval plus grounded generation delivers fast insight with traceable sources.

What RAG actually enables for IP teams

Examples your stakeholders understand:

- Mine your own portfolio for technical solutions

Search every claim, figure, and design note to find mechanisms, materials, and parameter ranges you have already disclosed. Surface reusable components and known work-arounds for current engineering problems.

- Prior art and novelty scanning

Retrieve semantically similar claims and figures across languages and families, then generate a short brief with citations for attorney review.

- Design-around ideation

Given a competitor claim element, retrieve nearby disclosures in your corpus and related art, then suggest candidate variations for engineering exploration. Treat these as prompts for humans, not legal advice.

- FTO triage

Combine keyword filters with vector retrieval on claims and independent claim elements to assemble a first-pass stack for counsel. The answer includes citations and confidence notes.

- Prosecution support

Pull office actions, cited references, and claim histories to draft concise summaries of “what moved the examiner.” This helps with strategy prep and internal handovers.

- Portfolio hygiene

Detect duplicates, stale families, and missing links between legal status, corporate trees, and valuation signals. Generate a to-fix list for data ops.

- Competitive and partnership scouting

Retrieve clusters around a CPC class or a problem statement and highlight new assignees, inventors, or cross-overs into your domains.

- Design and image look-alikes

Use image embeddings on design rights and patent figures to find visual similarity that keyword search misses.
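Duplicate detection under portfolio hygiene can start with a simple lexical check before any embeddings are involved. The sketch below uses Jaccard similarity over word 3-grams ("shingles"), a common near-duplicate technique swapped in here for illustration; the article does not say which method Lighthouse IP uses. The record IDs, texts, and the 0.6 threshold are hypothetical, and a vector-similarity threshold could replace the Jaccard score in the same loop.

```python
import re
from itertools import combinations


def shingles(text, n=3):
    """Set of lower-cased word n-grams ("shingles") in a text."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard(a, b):
    """Size of the intersection divided by size of the union (0.0 for two empty sets)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def near_duplicates(docs, threshold=0.6, n=3):
    """Return (id_a, id_b, score) for every pair whose shingle overlap meets the threshold."""
    sh = {doc_id: shingles(text, n) for doc_id, text in docs.items()}
    pairs = []
    for a, b in combinations(sorted(sh), 2):
        score = jaccard(sh[a], sh[b])
        if score >= threshold:
            pairs.append((a, b, round(score, 2)))
    return pairs


# Hypothetical records; rec-2 differs from rec-1 only in its first word.
docs = {
    "rec-1": "A fastening clip comprising a resilient arm and a locking tooth.",
    "rec-2": "The fastening clip comprising a resilient arm and a locking tooth.",
    "rec-3": "A battery housing with integrated cooling channels.",
}
print(near_duplicates(docs))  # [('rec-1', 'rec-2', 0.8)]
```

Comparing every pair is quadratic, which is fine for a pilot slice. Larger corpora usually need an approximate method such as MinHash or a vector index.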

These are starting points. Impact depends on corpus quality, evaluation method, and human workflow. We do not publish results in this article; redacted benchmarks are available on request.

Why pre-processing beats raw text

Raw text pipelines often push parsing, cleaning, and embedding to query time. Pre-processing moves that work to ingestion.

- Vectorisation converts text, tables, and images into dense vectors (embeddings), so similarity search reduces to fast distance calculations.

- Heavy compute is cached. Embeddings are created once and refreshed on a schedule.

- Metadata matters. Attach assignee, family, IPC/CPC classes, dates, legal status, and access-control lists (ACLs) to each chunk so queries can filter precisely.

- Hybrid retrieval helps. Many production stacks combine keyword search with vector search and then re-rank candidates.
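The points above fit together at query time roughly as follows: metadata filters narrow the pool, keyword and vector rankings are computed over pre-computed vectors, and the two rankings are merged. This is a hedged sketch, not Lighthouse IP's stack. Term counting stands in for a real keyword engine such as BM25, the merge uses reciprocal rank fusion (RRF, with the commonly used constant k=60) as one possible technique, the final re-ranking step is omitted, and the chunk IDs, texts, vectors, and the `status` field are hypothetical.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Chunk:
    chunk_id: str
    text: str
    vector: list[float]  # pre-computed at ingestion, not at query time
    meta: dict = field(default_factory=dict)


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def keyword_rank(chunks, terms):
    """Rank chunks by how many query terms they contain (a crude stand-in for BM25)."""
    scored = []
    for c in chunks:
        words = set(c.text.lower().split())
        hits = sum(t.lower() in words for t in terms)
        if hits:
            scored.append((hits, c.chunk_id))
    # sorted() is stable, so ties keep their original order.
    return [cid for _hits, cid in sorted(scored, key=lambda s: -s[0])]


def vector_rank(chunks, query_vec):
    return [c.chunk_id for c in sorted(chunks, key=lambda c: -cosine(query_vec, c.vector))]


def rrf(rankings, k=60):
    """Reciprocal rank fusion: score(d) = sum over rankings of 1 / (k + rank of d)."""
    scores = {}
    for ranking in rankings:
        for rank, cid in enumerate(ranking, start=1):
            scores[cid] = scores.get(cid, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda cid: -scores[cid])


def hybrid_search(chunks, terms, query_vec, **filters):
    # Metadata filters run first, so only in-scope chunks are ever scored.
    pool = [c for c in chunks if all(c.meta.get(f) == v for f, v in filters.items())]
    return rrf([keyword_rank(pool, terms), vector_rank(pool, query_vec)])


chunks = [
    Chunk("c1", "Graphene coated heat exchanger fin", [0.9, 0.1], {"status": "active"}),
    Chunk("c2", "Aluminium fin array for cooling", [0.8, 0.3], {"status": "active"}),
    Chunk("c3", "Graphene fin with lapsed protection", [0.95, 0.05], {"status": "lapsed"}),
    Chunk("c4", "Battery housing", [0.1, 0.9], {"status": "active"}),
]
results = hybrid_search(chunks, ["graphene", "fin"], [1.0, 0.0], status="active")
print(results)  # ['c1', 'c2', 'c4']
```

RRF only uses rank positions, so keyword and vector scores never need to be put on a common scale. Note that `c3` is the closest vector match but is excluded by the `status` filter before scoring.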

Practical speed and quality benefits

- Lower P50 and P95 latency because less work happens at query time.

- Better recall at k when chunking and metadata are consistent.

- Cleaner context windows so the model spends tokens on reasoning rather than boilerplate.

Gains vary by corpus and stack. If you need numbers, ask for our redacted before-and-after ranges.

How IP data fits the pipeline

- Bibliographic data powers filters and joins.

- Claims and full text are the main retrieval units.

- Citations and families improve recall and navigation.

- Legal status and events enable time-aware screening.

- Corporate trees normalise ownership for portfolio views.

- Design images and figures support visual similarity.

- Office actions and file wrappers add prosecution context.

- Valuation features support ranking and decision support.

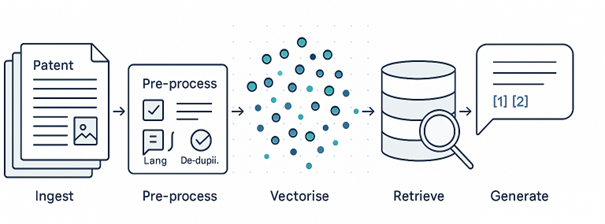
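Because claims are the main retrieval units and bibliographic data drives filters, a common pattern is to store one chunk per claim with the document's metadata copied onto it. The sketch below assumes claims arrive as plain text with each claim starting "n. " on a new line; the field names, the placeholder publication number, family ID, and CPC value, and the dependent-claim regex are all hypothetical heuristics, not a description of Lighthouse IP's data format.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class ClaimChunk:
    # Hypothetical field names; adapt them to your own schema.
    publication_number: str
    family_id: str
    claim_number: int
    text: str
    cpc: tuple[str, ...]
    legal_status: str
    independent: bool


# Assumption: each claim starts with "<n>. " at the beginning of a line.
CLAIM_START = re.compile(r"^\s*(\d+)\.\s+", re.MULTILINE)
# Heuristic: dependent claims refer back to an earlier claim by number.
DEPENDENT_REF = re.compile(r"\b(?:according to|of|as claimed in) claim \d+")


def chunk_claims(claims_text, biblio):
    """Split a claims section into one chunk per claim, copying bibliographic metadata onto each."""
    starts = list(CLAIM_START.finditer(claims_text))
    chunks = []
    for i, m in enumerate(starts):
        end = starts[i + 1].start() if i + 1 < len(starts) else len(claims_text)
        body = claims_text[m.end():end].strip()
        chunks.append(ClaimChunk(
            publication_number=biblio["publication_number"],
            family_id=biblio["family_id"],
            claim_number=int(m.group(1)),
            text=body,
            cpc=tuple(biblio.get("cpc", ())),
            legal_status=biblio.get("legal_status", "unknown"),
            independent=DEPENDENT_REF.search(body) is None,
        ))
    return chunks


claims = (
    "1. A fastening clip comprising a resilient arm.\n"
    "2. The clip of claim 1, wherein the arm is made of steel.\n"
    "3. A method of assembling a panel using a fastening clip."
)
biblio = {"publication_number": "XX-0000001-A1", "family_id": "F-001",
          "cpc": ["F16B"], "legal_status": "active"}  # placeholder values
for chunk in chunk_claims(claims, biblio):
    print(chunk.claim_number, chunk.independent, chunk.text)
```

Flagging independent claims at ingestion makes it cheap to restrict a query to independent claims later, which suits the FTO triage workflow described earlier.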

Minimal production architecture

- Ingest sources and normalise.

- Run OCR quality checks and detect language.

- Chunk consistently and embed text and images.

- Store vectors with metadata and access controls.

- Retrieve with filters using hybrid search.

- Re-rank and generate an answer with citations.

- Capture feedback and refresh embeddings on a schedule.
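For the scheduled refresh step, one way to keep heavy compute cached is to store a content hash next to each vector and re-embed only chunks whose text changed. This is a minimal sketch under stated assumptions: the index is an in-memory dict of `chunk_id -> (hash, vector)`, whitespace-only edits are treated as "no change", and `fake_embed` is a hypothetical stand-in for a real embedding model.

```python
import hashlib


def content_hash(text):
    """Stable fingerprint of a chunk's text; whitespace is normalised first."""
    return hashlib.sha256(" ".join(text.split()).encode("utf-8")).hexdigest()


def plan_refresh(current_chunks, index_hashes):
    """Compare the latest ingest with what is already indexed.

    current_chunks: {chunk_id: text} from the latest ingest.
    index_hashes:   {chunk_id: hash} stored alongside the vectors.
    Returns (to_embed, to_delete); unchanged chunks appear in neither list.
    """
    to_embed = [cid for cid, text in current_chunks.items()
                if index_hashes.get(cid) != content_hash(text)]
    to_delete = [cid for cid in index_hashes if cid not in current_chunks]
    return sorted(to_embed), sorted(to_delete)


def run_refresh(current_chunks, index, embed):
    """Apply a refresh plan to an in-memory index {chunk_id: (hash, vector)}."""
    hashes = {cid: h for cid, (h, _vec) in index.items()}
    to_embed, to_delete = plan_refresh(current_chunks, hashes)
    for cid in to_delete:
        del index[cid]
    for cid in to_embed:
        text = current_chunks[cid]
        index[cid] = (content_hash(text), embed(text))
    return to_embed, to_delete


def fake_embed(text):
    # Hypothetical stand-in; replace with a call to your embedding model.
    return [float(len(text))]


index = {}
first = run_refresh({"a": "claim one", "b": "claim two"}, index, fake_embed)
# "a" only gains whitespace, "b" disappears, "c" is new.
second = run_refresh({"a": "claim   one", "c": "claim three"}, index, fake_embed)
print(first, second, sorted(index))
```

On the second run only `c` is embedded, which is the point: refresh cost scales with what changed, not with corpus size. Storing the hash with the vector also gives you a timestamp hook for measuring freshness.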

What to measure

- Latency bands: P50 and P95 for end-to-end queries.

- Retrieval quality: recall at k on a labelled set.

- Groundedness: citation correctness and answer coverage.

- Cost per 1k queries: serving, storage, and refresh.

- Freshness: time from source change to index update.
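Two of these metrics are easy to compute once you have a labelled query set and a log of end-to-end timings. The sketch below shows recall at k averaged over queries and nearest-rank percentiles for P50 and P95. The document IDs and latency values are invented for illustration and are not measurements; other percentile definitions (for example linear interpolation) give slightly different numbers on small samples.

```python
import math


def recall_at_k(retrieved, relevant, k):
    """Fraction of the labelled relevant IDs that appear in the top-k results."""
    if not relevant:
        return 0.0
    return len(set(retrieved[:k]) & set(relevant)) / len(relevant)


def mean_recall_at_k(runs, k):
    """runs: list of (retrieved_ids, relevant_ids) pairs, one per labelled query."""
    return sum(recall_at_k(r, rel, k) for r, rel in runs) / len(runs)


def percentile(samples, p):
    """Nearest-rank percentile: p=50 gives P50, p=95 gives P95."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


# Illustrative data only.
runs = [
    (["d1", "d7", "d3"], ["d1", "d3"]),  # both relevant docs found -> 1.0
    (["d9", "d2", "d4"], ["d2", "d5"]),  # one of two found -> 0.5
]
latencies_ms = [120, 95, 310, 140, 101, 99, 180, 870, 130, 115]

print(mean_recall_at_k(runs, k=3))   # 0.75
print(percentile(latencies_ms, 50))  # 120
print(percentile(latencies_ms, 95))  # 870
```

The single slow query dominates P95 here, which is why tracking both bands matters: a median can look healthy while the tail is not.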

Getting started

- Define a single high-value workflow such as portfolio mining for solution reuse or FTO triage.

- Prepare a 50k to 200k document slice with strict data hygiene.

- Choose an embedding model that performs well on multilingual technical text and test it on your own eval set.

- Stand up hybrid retrieval with re-ranking.

- Ship a narrow pilot to real users and iterate on chunking, filters, and prompts.

Where Lighthouse IP helps

We supply pre-processed IP data across patents, designs, trademarks, legal events, corporate trees, and valuation features that are ready for retrieval and vectorisation. Samples are available for sandbox testing. Client outcomes are not published here; we provide redacted benchmarks on request.

Want a concise ingestion checklist and a sample of pre-vectorised patent claims with metadata? Send me an email and I will share it.

About the author

Kacper Gorski, Head of GTM at Lighthouse IP

Kacper Gorski is Head of Go-to-Market at Lighthouse IP, where he leads commercial strategy and partnerships for the company’s global patent, trademark, and design data. He focuses on turning complex IP information into practical tools and services, working with law firms, corporates, and analytics partners to link IP data to real business decisions. Kacper is currently developing new AI and vector-based services that make IP data more accessible and actionable for customers.